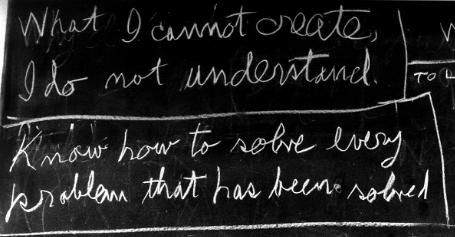

“What I cannot create, I do not understand.” Richard Feynman, one of the clearest thinkers in the history of science, left this statement on his final blackboard.

That statement is incredibly useful in data science, data engineering, and software engineering, where problems are complex, and the process of building a solution reveals all the little questions that one must answer to solve the main problem. I’ve built hundreds of analyses over the past decade, and along the way, I’ve kept running into similar little questions:

- What does this column mean?

- How were the records in this data set collected?

- Why did the data-collecting organization decide this data was worth collecting?

- Who used this data, and for what?

Solving these little questions was and remains integral to understanding data correctly, but it often takes an incredible amount of time and work to reach reliable, well-founded answers. These little answers are extremely useful when trying to solve related big problems! Still, without some way to package the little answers (the metadata) with the data set that gives them context and effective ways to search through data and metadata, a lot of the value of answering the little questions is lost and will inevitably be duplicated by others.

I’ve been exploring this larger problem for a few years now, prototyping solutions to subproblems, improving my understanding of the problem by discovering the pitfalls and complexities those solutions create downstream, and iterating on the entire design, all while keeping an eye on the tools other people are building to solve similar issues. After a number of iterations, I have a fairly clear understanding of the functionality this system, this data analysis platform, needs to capture more of the value produced through analysis.

A data analysis platform should include the following key components:

- Data storage: A place to store and manage large amounts of structured and unstructured data from various sources. This could include a data warehouse, data lake, data mesh, or other types of data storage systems.

- Data collection and integration: A way to collect, ingest, and process data from different sources, such as transactional systems, log files, and external data sources.

- Data modeling: A way to organize and structure data for efficient querying and analysis. In practice, this means ways to define relationships between different data tables and reorganize columns so tables reflect units that usefully represent concepts relevant to the business logic.

- Data visualization and reporting: A way to easily explore, analyze, and communicate findings from data, such as through interactive dashboards, charts, and reports.

- Data governance and security: A way to ensure the data is accurate, complete, and secure, such as through data quality checks and access controls.

- Data discovery: A way to search through available data assets, such as through a search interface, tags, data catalogs, data dictionaries, and lineage diagrams.

- Data collaboration and sharing: A way to share data and insights with different teams and departments, such as through data portals, data catalogs, and collaboration tools.

- Machine learning and Advanced analytics: A way to gain insights from data by tracking machine learning experiments.

- Feature engineering: A way to engineer new features or columns based on existing columns, such as through geocoding, clustering or categorization, scaling, and aggregation.

- Scalability and performance: A way to handle large amounts of data and support high-performance querying and analysis, such as through distributed computing and in-memory processing.

- Orchestration: A way to automate and coordinate the execution of complex pipelines spanning across multiple different systems.

- Documentation: A way for data engineers and scientists to learn how to add to the platform, such as by integrating new data sources, engineering new features, setting new expectations for data, adding discoveries to dashboards, or updating metadata.

The data space is still maturing, but it has reached the point where there are robust open-source projects that handle subsets of these requirements and can be assembled into a system that satisfies all of these specs. My current implementation uses:

- PostgreSQL + PostGIS for data storage,

- python code for data collection and integration,

- dbt for data modeling, feature engineering, and data discovery,

- great_expectations for quality monitoring,

- pgAdmin4 for database access control and administration,

- Airflow for orchestration,

- material-for-mkdocs and github-actions for documentation, and

- Docker with Compose to configure and run all of this infrastructure.

On the roadmap, I’m exploring data collaboration tools and data visialization/BI tools, so stay tuned for future updates.